A Fiji Scripting Tutorial

|

Most of what you want to do with an image exists in Fiji. This tutorial will provide you with the general idea of how Fiji works: how are its capabilities organized, and how can they be composed into a program. To learn about Fiji, we'll start the hard way: by programming. This tutorial will teach you both python and Fiji. |

|

||||||

Index

|

Tutorial created by Albert Cardona. Zurich, 2010-11-10. All source code is under the Public Domain. Remember: Fiji is just ImageJ (batteries included). See also:

Thanks to:

|

||||||

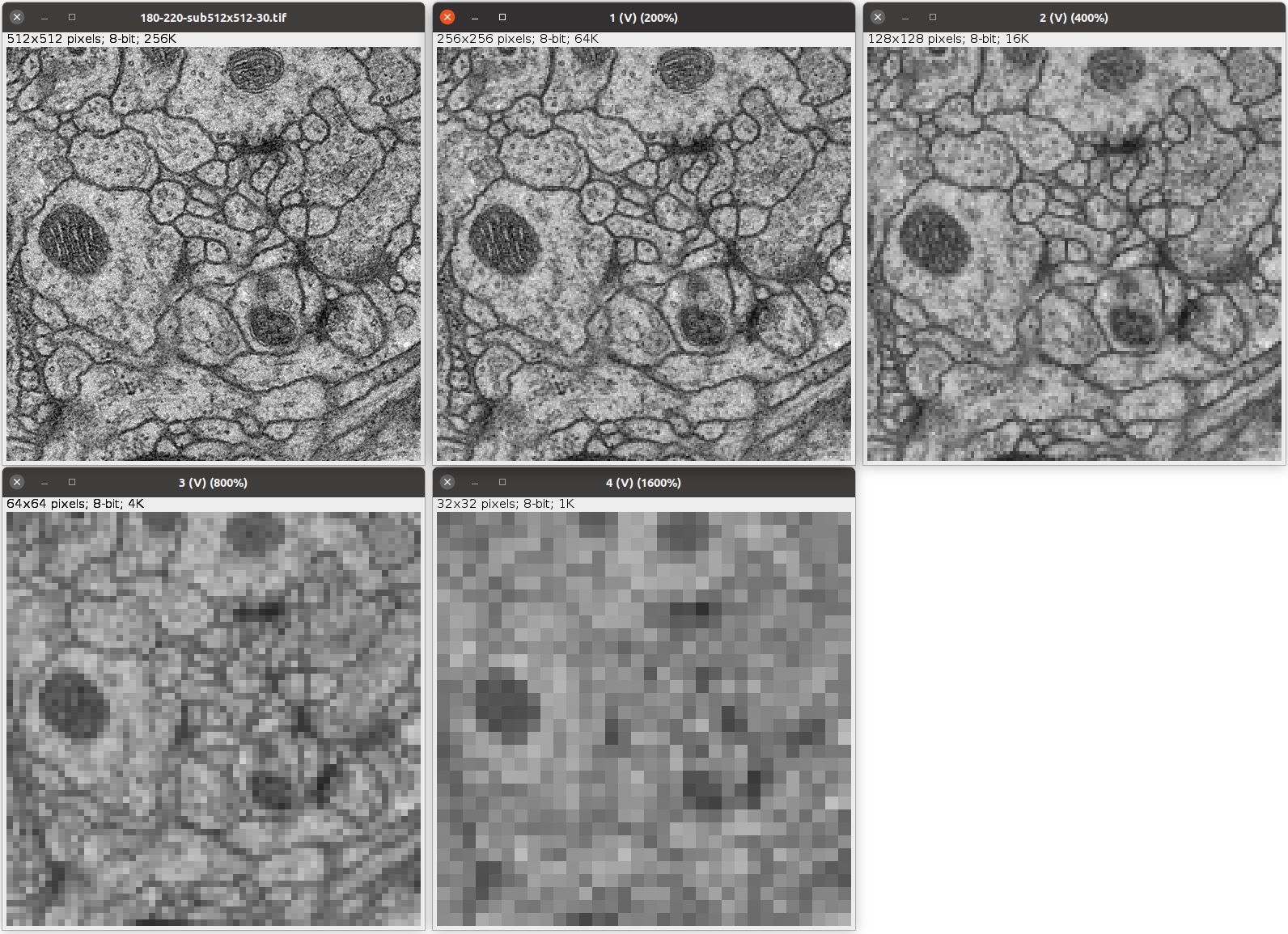

1. Getting startedOpen the Script Editor by choosing "File - New - Script". |

|

||||||

|

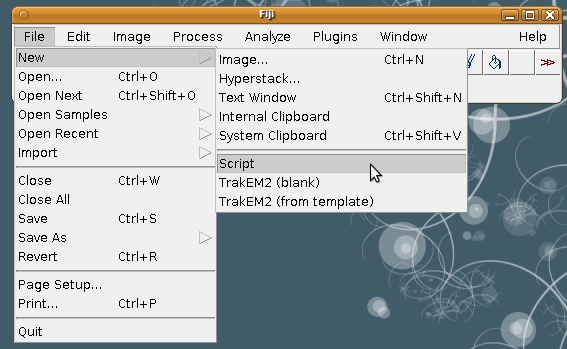

Alternatively, use the Command finder: Push 'l' (letter L) and then start typing "scri". You will see a list of Fiji commands, getting shorter the more letters you type. When the "Script Editor" command is visible, push the up arrow to go to it, and then push return to launch it. (Or double-click on it.) The Command Finder is useful for invoking any Fiji command. |

|

||||||

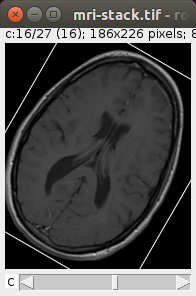

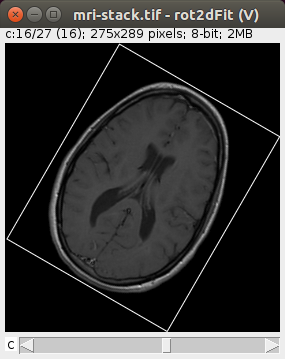

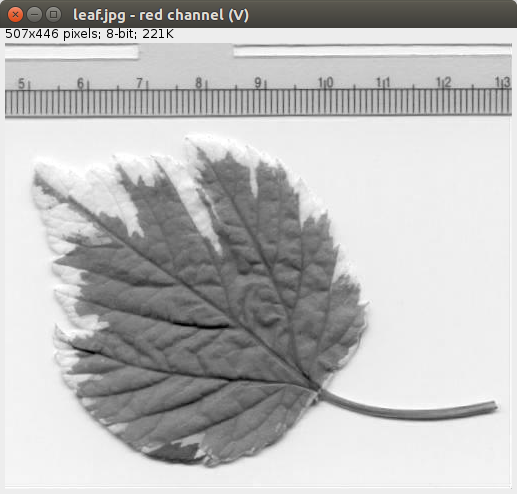

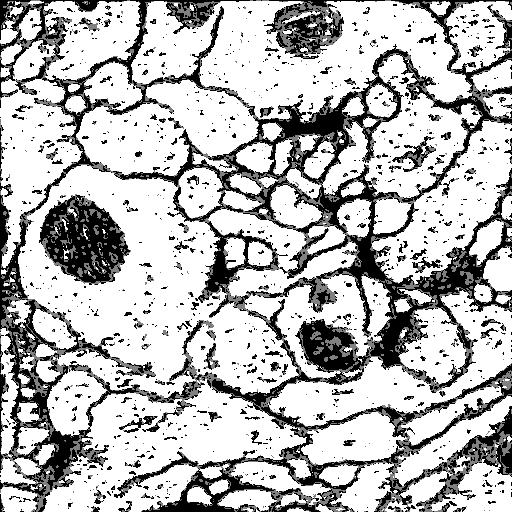

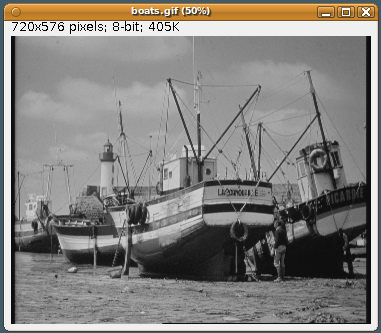

2. Your first Fiji scriptWe'll need an image to work on: please open any image. |

|

||||||

|

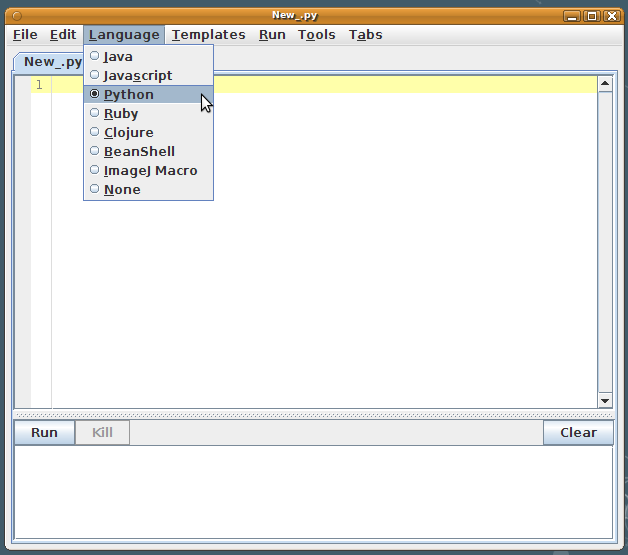

This tutorial will use the programming language Python 2.7. We start by telling the opened Script Editor what language you want to write the script on: choose "Language - Python". |

|

||||||

|

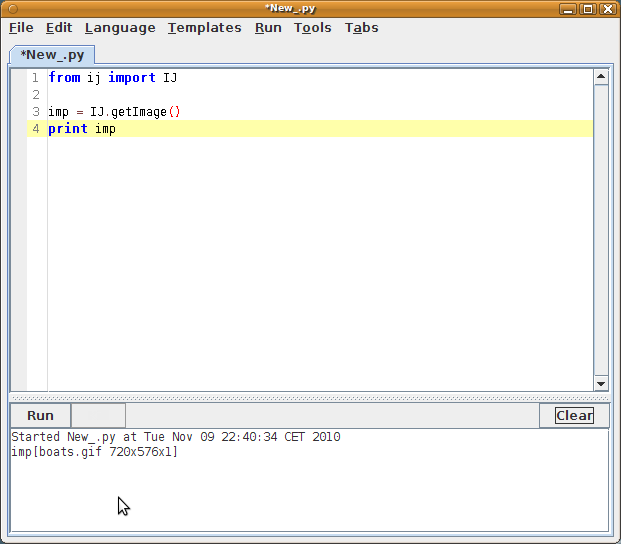

Type in what you see on the image to the right into the Script Editor, and then push "Run", or choose "Run - Run", or control+R (command+R in MacOSX). Line by line:

So what is "imp"? "imp" is a commonly used name to refer to an instance of an ImagePlus. The ImagePlus is one of ImageJ's abstractions to represent an image. Every image that you open in ImageJ is an instance of ImagePlus. |

|

||||||

|

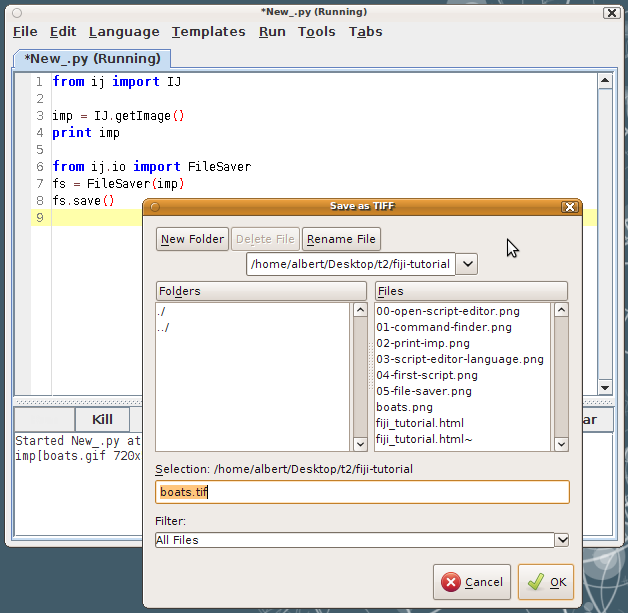

Saving an image with a file dialog The first action we'll do on our image is to save it. To do that, you could call "File - Save" from the menus. |

|

||||||

|

Saving an image directly to a file The point of running a script is to avoid human interaction. Notice that the '#' sign defines comments. In python, any text after a '#' is not executed. |

from ij import IJ

from ij.io import FileSaver

imp = IJ.getImage()

fs = FileSaver(imp)

# A known folder to store the image at:

folder = "/home/albert/Desktop/t2/fiji-tutorial"

filepath = folder + "/" + "boats.tif"

fs.saveAsTiff(filepath):

|

||||||

|

Saving an image ... checking first if it's a good idea. The FileSaver will overwrite whatever file exists at the file path that you give it. That is not always a good idea! Here, we write the same code but checking first:

And finally, if all expected preconditions hold, then we place the call to saveAsTiff. This script introduced three new programming items:

|

from ij import IJ

from ij.io import FileSaver

from os import path

imp = IJ.getImage()

fs = FileSaver(imp)

# A known folder to store the image at:

folder = "/home/albert/Desktop/t2/fiji-tutorial"

# Test if the folder exists before attempting to save the image:

if path.exists(folder) and path.isdir(folder):

print "folder exists:", folder

filepath = path.join(folder, "boats.tif") # Operating System-specific

if path.exists(filepath):

print "File exists! Not saving the image, would overwrite a file!"

elif fs.saveAsTiff(filepath):

print "File saved successfully at ", filepath

else:

print "Folder does not exist or it's not a folder!"

|

||||||

3. Inspecting properties and pixels of an imageAn image in ImageJ or Fiji is, internally, an instance of ImagePlus. In python, accessing fields of an instance is straightforward: just add a dot '.' between the variable "imp" and the field "title" to access. In the Fiji API documentation, if you don't see a specific field like width in a particular class, but there is a getWidth method, then from python they are one and the same. The image type Notice how we created a dictionary to hold key/value pairs: of the image type versus a text representation of that type. This dictionary (also called map or table in other programming languages) then lets us ask it for a specific image type (such as ImagePlus.GRAY8), and we get back the corresponding text, such as "8-bit". You may have realized by now that the ImagePlus.getType() (or what is the same in python: "imp.type") returns us any of the controled values of image type that an ImagePlus instance can take. These values are GRAY8, GRAY16, GRAY32, COLOR_RGB, and COLOR_256. What is the image type? It's the kind of pixel data that the image holds. It could be numbers from 0 to 255 (what fits in an 8-bit range), or from 0 to 65536 (values that fit in a 16-bit range), or could be three channels of 8-bit values (an RGB image), or floating-point values (32-bit). The COLOR_256 indicates an 8-bit image that has an associated look-up table: each pixel value does not represent an intensity, but rather it's associated with a color. The table of values versus colors is limited to 256, and hence these images may not look very well. For image processing, you should avoid COLOR_256 images (also known as "8-bit color" images). These images are meant for display in the web in ".gif" format, but have been superseeded by JPEG or PNG. The GRAY_8 ("8-bit"), GRAY_16 ("16-bit") and GRAY_32 ("32-bit") images may also be associated with a look-up table. For example, in a "green" look-up table on an 8-bit image, values of zero are black, values of 128 are darkish green, and the maximum value of 255 is fully pure green. |

from ij import IJ, ImagePlus

# Grab the last activated image

imp = IJ.getImage()

# Print image details

print "title:", imp.title

print "width:", imp.width

print "height:", imp.height

print "number of pixels:", imp.width * imp.height

print "number of slices:", imp.getNSlices()

print "number of channels:", imp.getNChannels()

print "number of time frames:", imp.getNFrames()

types = {ImagePlus.COLOR_RGB : "RGB",

ImagePlus.GRAY8 : "8-bit",

ImagePlus.GRAY16 : "16-bit",

ImagePlus.GRAY32 : "32-bit",

ImagePlus.COLOR_256 : "8-bit color"}

print "image type:", types[imp.type]

Started New_.py at Wed Nov 10 14:57:46 CET 2010

title: boats.gif

width: 720

height: 576

number of pixels: 414720

number of slices: 1

number of channels: 1

number of time frames: 1

image type: 8-bit

|

||||||

|

Obtaining pixel statistics of an image (and your first function) ImageJ / Fiji offers an ImageStatistics class that does all the work for us. Notice how we import the ImageStatistics namespace as "IS", i.e. we alias it--it's too long to type! The options variable is the bitwise-or combination of three different static fields of the ImageStatistics class. The final options is an integer that has specific bits set that indicate mean, median and min and max values. |

from ij import IJ

from ij.process import ImageStatistics as IS

# Grab the active image

imp = IJ.getImage()

# Get its ImageProcessor

ip = imp.getProcessor()

options = IS.MEAN | IS.MEDIAN | IS.MIN_MAX

stats = IS.getStatistics(ip, options, imp.getCalibration())

# print statistics on the image

print "Image statistics for", imp.title

print "Mean:", stats.mean

print "Median:", stats.median

print "Min and max:", stats.min, "-", stats.max

Started New_.py at Wed Nov 10 19:54:37 CET 2010

Image statistics for boats.gif

Mean: 120.026837384

Median: 138.0

Min and max: 3.0 - 220.0

|

||||||

|

Now, how about obtaining statistics for a lot of images? (in other words, batch processing).

So we define a folder that contains our images, and we loop the list of filenames that it has. For every filename that ends with ".tif", we load it as an ImagePlus, and handle it to the getStatistics function, which returns us the mean, median, and min and max values. (Note: if the images are stacks, use StackStatistics instead.) This script introduces a few new concepts:

See also the python documentation page on control flow, with explanations on the keywords if, else and elif, the for loop keyword and the break and continue keywords, defining a function with def, functions with variable number of arguments, anonymous functions (with the keyword lambda), and guidelines on coding style. |

from ij import IJ

from ij.process import ImageStatistics as IS

import os

options = IS.MEAN | IS.MEDIAN | IS.MIN_MAX

def getStatistics(imp):

""" Return statistics for the given ImagePlus """

ip = imp.getProcessor()

stats = IS.getStatistics(ip, options, imp.getCalibration())

return stats.mean, stats.median, stats.min, stats.max

# Folder to read all images from:

folder = "/home/albert/Desktop/t2/fiji-tutorial"

# Get statistics for each image in the folder

# whose file extension is ".tif":

for filename in os.listdir(folder):

if filename.endswith(".tif"):

print "Processing", filename

imp = IJ.openImage(os.path.join(folder, filename))

if imp is None:

print "Could not open image from file:", filename

continue

mean, median, min, max = getStatistics(imp)

print "Image statistics for", imp.title

print "Mean:", mean

print "Median:", median

print "Min and max:", min, "-", max

else:

print "Ignoring", filename

|

||||||

|

Iterating pixels Iterating pixels is considered a low-level operation that you would seldom, if ever, have to do. But just so you can do it when you need to, here are various ways to iterate all pixels in an image. The three iteration methods:

The last should be your preferred method. There's the least opportunity for introducting an error, and it is very concise. Regarding the example given, keep in mind:

|

from ij import IJ

from sys.float_info import max as MAX_FLOAT

# Grab the active image

imp = IJ.getImage()

# Grab the image processor converted to float values

# to avoid problems with bytes

ip = imp.getProcessor().convertToFloat() # as a copy

# The pixels points to an array of floats

pixels = ip.getPixels()

print "Image is", imp.title, "of type", imp.type

# Obtain the minimum pixel value

# Method 1: the for loop, C style

minimum = MAX_FLOAT

for i in xrange(len(pixels)):

if pixels[i] < minimum:

minimum = pixels[i]

print "1. Minimum is:", minimum

# Method 2: iterate pixels as a list

minimum = MAX_FLOAT

for pix in pixels:

if pix < minimum:

minimum = pix

print "2. Minimum is:", minimum

# Method 3: apply the built-in min function

# to the first pair of pixels,

# and then to the result of that and the next pixel, etc.

minimum = reduce(min, pixels)

print "3. Minimum is:", minimum

Started New_.py at Wed Nov 10 20:49:31 CET 2010

Image is boats.gif of type 0

1. Minimum is: 3.0

2. Minimum is: 3.0

3. Minimum is: 3.0

|

||||||

|

On iterating or looping lists or collections of elements Ultimately all operations that involve iterating a list or a collection of elements can be done with the for looping construct. But in almost all occasions the for is not the best choice, neither regarding performance nor in clarity or conciseness. The latter is important to minimize the amount of errors that we may possibly introduce without noticing. There are three kinds of operations to perform on lists or collections: map, reduce, and filter. We show them here along with the equivalent for loop. |

|||||||

|

A map operation takes a list of length N and returns another list also of length N, with the results of applying a function (that takes a single argument) to every element of the original list. For example, suppose you want to get a list of all images open in Fiji. With the for loop, we have to create first a list explictly and then append one by one every image. With list comprehension, the list is created directly and the logic of what goes in it is placed inside the square brackets--but it is still a loop. That is, it is still a sequential, unparallelizable operation. With the map, we obtain the list automatically by executing the function WM.getImage to every ID in the list of IDs. While this is a trivial example, suppose you were executing a complex operation on every element of a list or an array. If you were to redefine the map function to work in parallel, suddenly any map operation in your program will run faster, without you having to modify a single line of tested code! |

from ij import WindowManager as WM

# Method 1: with a 'for' loop

images = []

for id in WM.getIDList():

images.append(WM.getImage(id))

# Method 2: with list comprehension

images = [WM.getImage(id) for id in WM.getIDList()]

# Method 3: with a 'map' operation

images = map(WM.getImage, WM.getIDList())

|

||||||

|

A filter operation takes a list of length N and returns a shorter list, with anywhere from 0 to N elements. Only those elements of the original list that pass a test are placed in the new, returned list. For example, suppose you want to find the subset of opened images in Fiji whose title match a specific criterium. With the for loop, we have to create a new list first, and then append elements to that list as we iterate the list of images. The second variant of the for loop uses list comprehension. The code is reduced to a single short line, which is readable, but is still a python loop (with potentially lower performance). With the filter operation, we get the (potentially) shorter list automatically. The code is a single short line, instead of 4 lines! |

from ij import WindowManager as WM

# A list of all open images

imps = map(WM.getImage, WM.getIDList())

def match(imp):

""" Returns true if the image title contains the word 'cochlea'"""

return imp.title.find("cochlea") > -1

# Method 1: with a 'for' loop

# (We have to explicitly create a new list)

matching = []

for imp in imps:

if match(imp):

matching.append(imp)

# Method 2: with list comprehension

matching = [imp for imp in imps if match(imp)]

# Method 3: with a 'filter' operation

matching = filter(match, imps)

|

||||||

|

A reduce operation takes a list of length N and returns a single value. This value is composed from applying a function that takes two arguments to the first two elements of the list, then to the result of that and the next element, etc. Optionally an initial value may be provided, so that the cycle starts with that value and the first element of the list. For example, suppose you want to find the largest image, by area, from the list of all opened images in Fiji. With the for loop, we have to we have to keep track of which was the largest area in a pair of temporary variables. And even check whether the stored largest image is null! We could have initizalized the largestArea variable to the first element of the list, and then start looping at the second element by slicing the first element off the list (with "for imp in imps[1:]:"), but then we would have had to check if the list contains at least one element. With the reduce operation, we don't need any temporary variables. All we need is to define a helper function (which could have been an anonymous lambda function, but we defined it explicitly for extra clarity and reusability). |

from ij import IJ

from ij import WindowManager as WM

# A list of all open images

imps = map(WM.getImage, WM.getIDList())

def area(imp):

return imp.width * imp.height

# Method 1: with a 'for' loop

largest = None

largestArea = 0

for imp in imps:

a = area(imp)

if largest is None:

largest = imp

largestArea = a

else:

if a > largestArea:

largest = imp

largestArea = a

# Method 2: with a 'reduce' operation

def largestImage(imp1, imp2):

return imp1 if area(imp1) > area(imp2) else imp2

largest = reduce(largestImage, imps)

|

||||||

|

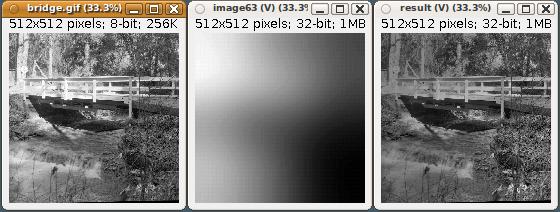

Subtract the min value to every pixel First we obtain the minimum pixel value, using the reduce method explained just above. Then we subtract this minimum value to every pixel. We have two ways to do it:

With the first method, since the pixels array was already a copy (notice we called convertToFloat() on the ImageProcessor), we can use it to create a new ImagePlus with it without any unintended consequences. With the second method, the new list of pixels must be given to a new FloatProcessor instance, and with it, a new ImagePlus is created, of the same dimensions as the original. |

from ij import IJ, ImagePlus

from ij.process import FloatProcessor

imp = IJ.getImage()

ip = imp.getProcessor().convertToFloat() # as a copy

pixels = ip.getPixels()

# Apply the built-in min function

# to the first pair of pixels,

# and then to the result of that and the next pixel, etc.

minimum = reduce(min, pixels)

# Method 1: subtract the minim from every pixel,

# in place, modifying the pixels array

for i in xrange(len(pixels)):

pixels[i] -= minimum

# ... and create a new image:

imp2 = ImagePlus(imp.title, ip)

# Method 2: subtract the minimum from every pixel

# and store the result in a new array

pixels3 = map(lambda x: x - minimum, pixels)

# ... and create a new image:

ip3 = FloatProcessor(ip.width, ip.height, pixels3, None)

imp3 = ImagePlus(imp.title, ip3)

# Show the images in an ImageWindow:

imp2.show()

imp3.show()

|

||||||

|

Reduce a pixel array to a single value: count pixels above a threshold Suppose you want to analyze a subset of pixels. For example, find out how many pixels have a value over a certain threshold. The reduce built-in function is made just for that. It takes a function with two arguments (the running count and the next pixel); the list or array of pixels; and an initial value (in this case, zero) for the first argument (the "count'), and will return a single value (the total count). In this example, we computed first the mean pixel intensity, and then filtered all pixels for those whose value is above the mean. Notice that we compute the mean by using the convenient built-in function sum, which is able to add all numbers contained in any kind of collection (be it a list, a native array, a set of unique elements, or the keys of a dictionary). We could imitate the built-in sum function with reduce(lambda s, x: s + x, pixels), but paying a price in performance. Notice we are using anonymous functions again (that is, functions that lack a name), declared in place with the lambda keyword. A function defined with def would do just fine as well. |

from ij import IJ

# Grab currently active image

imp = IJ.getImage()

ip = imp.getProcessor().convertToFloat()

pixels = ip.getPixels()

# Compute the mean value (sum of all divided by number of pixels)

mean = sum(pixels) / len(pixels)

# Count the number of pixels above the mean

n_pix_above = reduce(lambda count, a: count + 1 if a > mean else count, pixels, 0)

print "Mean value", mean

print "% pixels above mean:", n_pix_above / float(len(pixels)) * 100

Started New_.py at Thu Nov 11 01:50:49 CET 2010

Mean value 120.233899981

% pixels above mean: 66.4093846451

|

||||||

|

Another useful application of filtering pixels by their value: finding the coordinates of all pixels above a certain value (in this case, the mean), and then calculating their center of mass. The filter built-in function is made just for that. The indices of the pixels whose value is above the mean are collected in a list named "above", which is created by filtering the indices of all pixels (provided by the built-in function xrange). The filtering is done by an anonymous function declared with lambda, with a single argument: the index i of the pixel. Here, note that in ImageJ, the pixels of an image are stored in a linear array. The length of the array is width * height, and the pixels are stored as concatenated rows. Therefore, the modulus of dividing the index of a pixel by the width the image provides the X coordinate of a pixel. Similarly, the integer division of the index of a pixel by the width provides the Y coordinate. To compute the center of mass, there are two equivalent methods. The C-style method with a for loop, with every variable being declared prior to the loop, and then modified at each loop iteration and, after the loop, dividing the sum of coordinates by the number of coordinates (the length of the "above" list). For this example, this is the method with the best performance.The second method computes the X and Y coordinates of the center of mass with a single line of code for each. Notice that both lines are nearly identical, differing only in the body of the function mapped to the "above" list containing the indices of the pixels whose value is above the mean. While, in this case, the method is less performant due to repeated iteration of the list "above", the code is shorter, easier to read, and with far less opportunities for introducing errors. If the actual computation was far more expensive than the simple calculation of the coordinates of a pixel given its index in the array of pixels, this method would pay off for its clarity. |

from ij import IJ

# Grab the currently active image

imp = IJ.getImage()

ip = imp.getProcessor().convertToFloat()

pixels = ip.getPixels()

# Compute the mean value

mean = sum(pixels) / len(pixels)

# Obtain the list of indices of pixels whose value is above the mean

above = filter(lambda i: pixels[i] > mean, xrange(len(pixels)))

print "Number of pixels above mean value:", len(above)

# Measure the center of mass of all pixels above the mean

# The width of the image, necessary for computing the x,y coordinate of each pixel

width = imp.width

# Method 1: with a for loop

xc = 0

yc = 0

for i in above:

xc += i % width # the X coordinate of pixel at index i

yc += i / width # the Y coordinate of pixel at index i

xc = xc / len(above)

yc = yc / len(above)

print xc, yc

# Method 2: with sum and map

xc = sum(map(lambda i: i % width, above)) / len(above)

yc = sum(map(lambda i: i / width, above)) / len(above)

print xc, yc

|

||||||

|

The third method pushes the functional approach too far. While written in a single line, that doesn't mean it is clearer to read: it's intent is obfuscated by starting from the end: the list comprehension starts off by stating that each element (there are only two) of the list resulting from the reduce has to be divided by the length of the list of pixels "above", and only then we learn than the collection being iterated is the array of two coordinates, created at every iteration over the list "above", containing the sum of all coordinates for X and for Y. Notice that the reduce is invoked with three arguments, the third one being the list [0, 0] containing the initialization values of the sums. Confusing! Avoid writing code like this. Notice as well that, by creating a new list at every iteration step, this method is the least performant of all. The fourth method is a clean up of the third method. Notice that we import the partial function from the functools package. With it, we are able to create a version of the "accum" helper function that has a frozen "width" argument (also known as currying a function). In this way, the "accum" function is seen by the reduce as a two-argument function (which is what reduce needs here). While we regain the performance of the for loop, notice that now the code is just as long as with the for loop. The purpose of writing this example is to illustrate how one can write python code that doesn't use temporary variables, these generally being potential points of error in a computer program. It is always better to write lots of small functions that are easy to read, easy to test, free of side effects, documented, and that then can be used to assemble our program. |

# (Continues from above...)

# Method 3: iterating the list "above" just once

xc, yc = [d / len(above) for d in

reduce(lambda c, i: [c[0] + i % width, c[1] + i / width], above, [0, 0])]

print xc, yc

# Method 4: iterating the list "above" just once, more clearly and performant

from functools import partial

def accum(width, c, i):

""" Accumulate the sum of the X,Y coordinates of index i in the list c."""

c[0] += i % width

c[1] += i / width

return c

xy, yc = [d / len(above) for d in reduce(partial(accum, width), above, [0, 0])]

print xc, yc

|

||||||

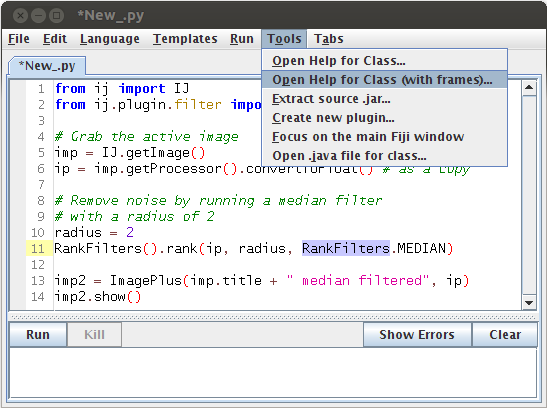

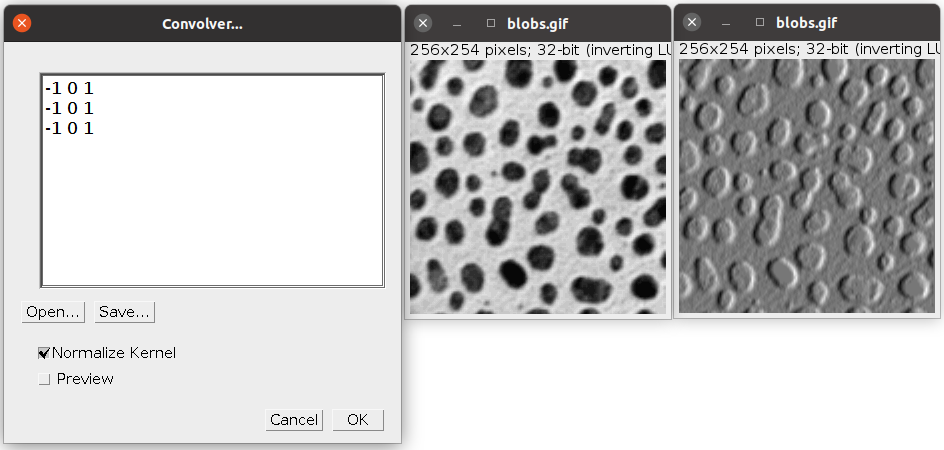

4. Running ImageJ / Fiji plugins on an ImagePlusHere is an example plugin run programmatically: a median filter applied to the currently active image. The median filter, along with the mean, minimum, maximum, variance, remove outliers and despeckle menu commands, are implemented in the RankFilters class. |

from ij import IJ, ImagePlus

from ij.plugin.filter import RankFilters

# Grab the active image

imp = IJ.getImage()

ip = imp.getProcessor().convertToFloat() # as a copy

# Remove noise by running a median filter

# with a radius of 2

radius = 2

RankFilters().rank(ip, radius, RankFilters.MEDIAN)

imp2 = ImagePlus(imp.title + " median filtered", ip)

imp2.show()

|

||||||

|

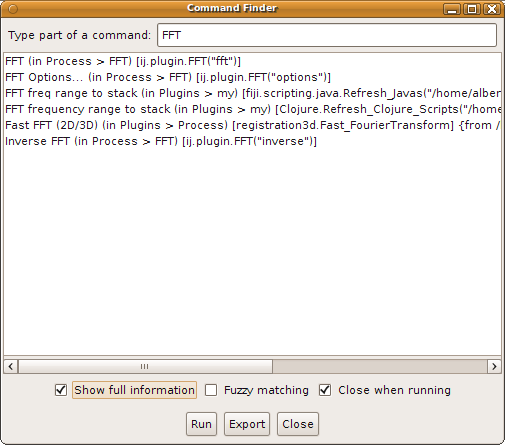

Finding the class that implements a specific ImageJ command When starting ImageJ/Fiji programming, the problem is not so much how to run a plugin on an image, as it is to find out which class implements which plugin. Here is a simple method to find out, via the Command Finder:

Notice that the plugin class comes with some text. For example:

FFT (in Process > FFT) [ij.plugin.FFT("fft")]

Inverse FFT (in Process > FFT) [ij.plugin.FFT("inverse")]

The above two commands are implemented by a single plugin (ij.plugin.FFT) whose run method accepts, like all PlugIn, a text string specifying the action: the fft, or the inverse. |

|

||||||

|

Finding the java documentation for any class Once you have found the PlugIn class that implements a specific command, you may want to use that class directly. The information is either in the online java documentation or in the source code. How to find these?

|

|

||||||

|

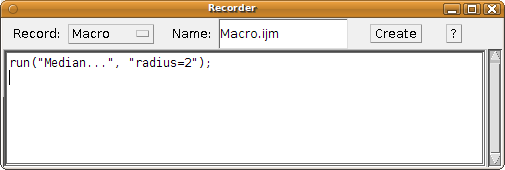

Figuring out what parameters a plugin requires To do that, we'll use the Macro Recorder. Make sure that an image is open. Then:

That is valid macro code, that ImageJ can execute. The first part is the command ("Median..."), the second part is the parameters that that command uses; in this case, just one ("radius=2"). If there were more parameters, they would be separated by spaces. Note that boolean parameters are true (the checkbox in the dialog is ticked) when present at all in the list of parameters of the macro code, and false otherwise (by default). |

|

||||||

|

Running a command on an image We can use these macro recordings to create jython code that executes a given plugin on a given image. Here is an example. Very simple! The IJ namespace has a function, run, that accepts an ImagePlus as first argument, then the name of the command to run, and then the macro-ready list of arguments that the command requires. |

from ij import IJ

# Grab the active image

imp = IJ.getImage()

# Run the median filter on it, with a radius of 2

IJ.run(imp, "Median...", "radius=2")

|

||||||

5. Creating images and regions of interest (ROIs)Create an image from scratch An ImageJ/Fiji image is composed of at least three objects:

In the example, we create an empty array of floats (see creating native arrays), and fill it in with random float values. Then we give it to a FloatProcessor instance, which is then wrapped by an ImagePlus instance. Voilà! |

from ij import ImagePlus

from ij.process import FloatProcessor

from array import zeros

from random import random

width = 1024

height = 1024

pixels = zeros('f', width * height)

for i in xrange(len(pixels)):

pixels[i] = random()

fp = FloatProcessor(width, height, pixels, None)

imp = ImagePlus("White noise", fp)

imp.show()

|

||||||

|

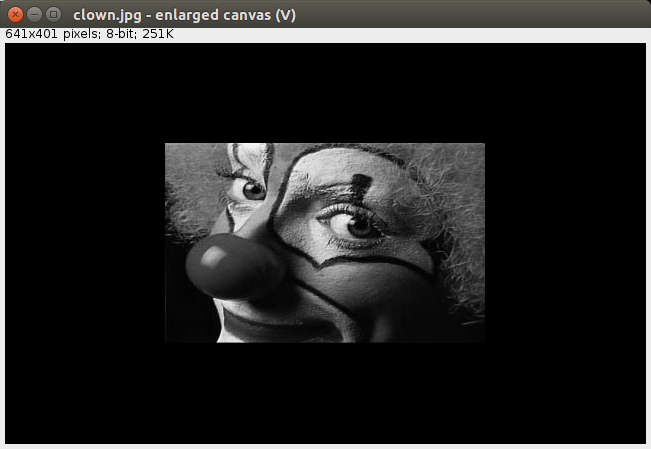

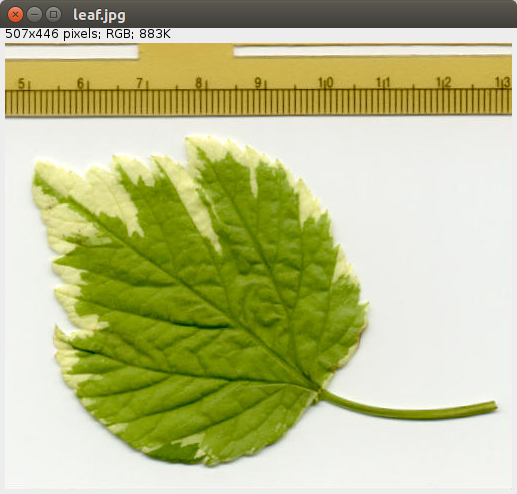

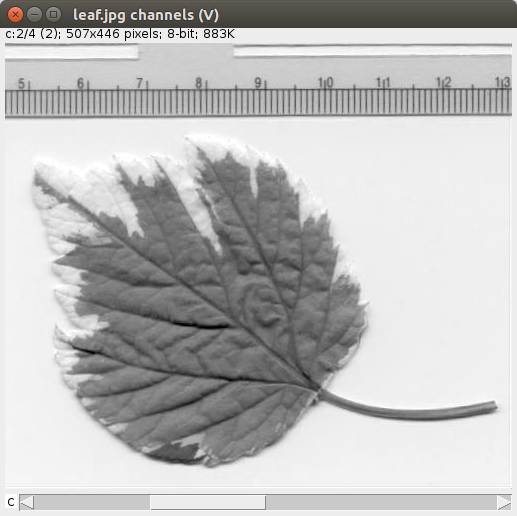

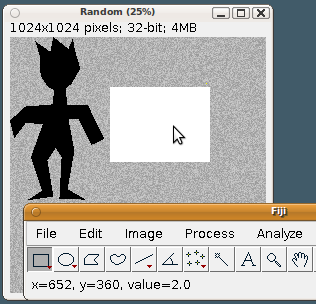

Fill a region of interest (ROI) with a given value To fill a region of interest in an image, we could iterate the pixels, find the pixels that lay within the bounds of interest, and set their values to a specified value. But that tedious and error prone. Much more effective is to create an instance of a Roi class or one of its subclasses (PolygonRoi, OvalRoi, ShapeRoi, etc.) and tell the ImageProcessor to fill that region. In this example, we create an image filled with white noise like before, and then define a rectangular region of interest in it, which is filled with a value of 2.0. The white noise is drawn from a random distribution whose values range from 0 to 1. When filling an area of the FloatProcessor with a value of 2.0, that is the new maximum value. The area with 2.0 pixel values will look white (look at the status bar):

|

from ij import IJ, ImagePlus

from ij.process import FloatProcessor

from array import zeros

from random import random

from ij.gui import Roi, PolygonRoi

# Create a new ImagePlus filled with noise

width = 1024

height = 1024

pixels = zeros('f', width * height)

for i in xrange(len(pixels)):

pixels[i] = random()

fp = FloatProcessor(width, height, pixels, None)

imp = ImagePlus("Random", fp)

# Fill a rectangular region of interest

# with a value of 2:

roi = Roi(400, 200, 400, 300)

fp.setRoi(roi)

fp.setValue(2.0)

fp.fill()

# Fill a polygonal region of interest

# with a value of -3

xs = [234, 174, 162, 102, 120, 123, 153, 177, 171,

60, 0, 18, 63, 132, 84, 129, 69, 174, 150,

183, 207, 198, 303, 231, 258, 234, 276, 327,

378, 312, 228, 225, 246, 282, 261, 252]

ys = [48, 0, 60, 18, 78, 156, 201, 213, 270, 279,

336, 405, 345, 348, 483, 615, 654, 639, 495,

444, 480, 648, 651, 609, 456, 327, 330, 432,

408, 273, 273, 204, 189, 126, 57, 6]

proi = PolygonRoi(xs, ys, len(xs), Roi.POLYGON)

fp.setRoi(proi)

fp.setValue(-3)

fp.fill(proi.getMask()) # Attention!

imp.show()

|

||||||

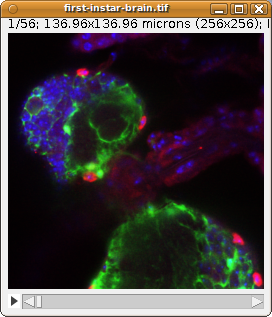

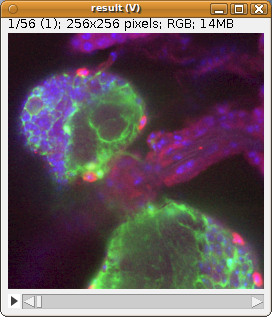

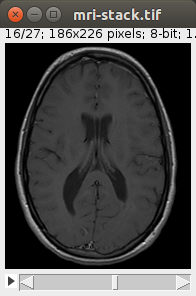

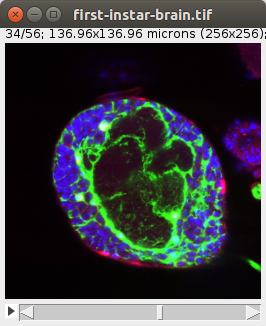

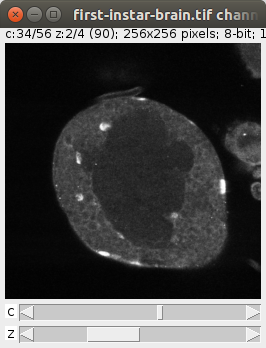

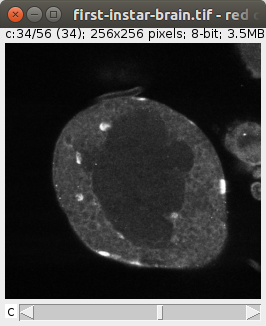

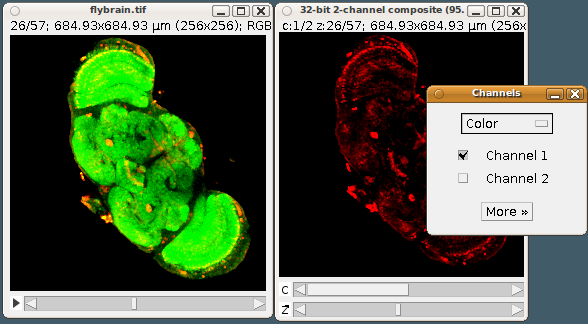

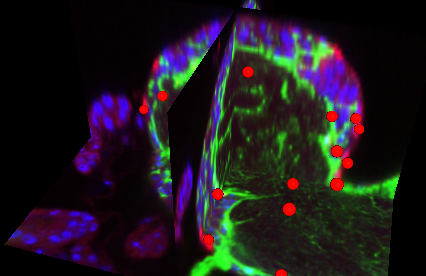

6. Create and manipulate image stacks and hyperstacksLoad a color image stack and extract its green channel First we load the stack from the web--it's the "Fly Brain" sample image. Then we iterate its slices. Each slice is a ColorProcessor: wraps an integer array. Each integer is represented by 4 bytes, and the lower 3 bytes represent, respectively, the intensity values for red, green and blue. The upper most byte is usually reserved for alpha (the inverse of transparency), but ImageJ ignores it. Dealing with low-level details like that is not necessary. The ColorProcessor has a method, toFloat, that can give us a FloatProcessor for a specific color channel. Red is 0, green is 1, and blue is 2. Representing the color channel in floats is most convenient for further processing of the pixel values--won't overflow like a byte would. In this example, all we do is collect each slice into a list of slices we named greens. Then we add all the slices to a new ImageStack, and pass it to a new ImagePlus. Then we invoke the "Green" command on that ImagePlus instance, so that a linear green look-up table is assigned to it. And we show it. |

from ij import IJ, ImagePlus, ImageStack

# Load a stack of images: a fly brain, in RGB

imp = IJ.openImage("https://imagej.nih.gov/ij/images/flybrain.zip")

stack = imp.getImageStack()

print "number of slices:", imp.getNSlices()

# A list of green slices

greens = []

# Iterate each slice in the stack

for i in xrange(1, imp.getNSlices()+1):

# Get the ColorProcessor slice at index i

cp = stack.getProcessor(i)

# Get its green channel as a FloatProcessor

fp = cp.toFloat(1, None)

# ... and store it in a list

greens.append(fp)

# Create a new stack with only the green channel

stack2 = ImageStack(imp.width, imp.height)

for fp in greens:

stack2.addSlice(None, fp)

# Create a new image with the stack of green channel slices

imp2 = ImagePlus("Green channel", stack2)

# Set a green look-up table:

IJ.run(imp2, "Green", "")

imp2.show()

|

||||||

|

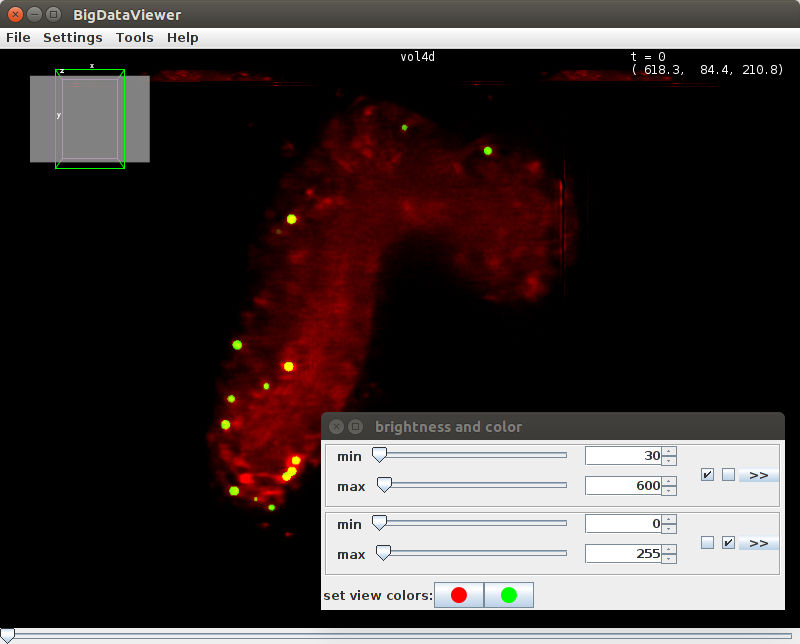

Convert an RGB stack to a 2-channel, 32-bit hyperstack We load an RGB stack--the "Fly brain" sample image, as before. Suppose we want to analyze each color channel independently: an RGB image doesn't really let us, without lots of low-level work to disentangle the different color values from each pixel value. So we convert the RGB stack to a hyperstack with two separate channels, where each channel slice is a 32-bit FloatProcessor. The first step is to create a new ImageStack instance, to hold all the slices that we'll need: one per color channel, times the number of slices. Realize that we could have 7 channels if we wanted, or 20, for each slice. As many as you want. The final step is to open the hyperstack. For that:

Open the "Image - Color - Channels Tool" and you'll see that the Composite image is set to show only the red channel--try checking the second channel as well. For a real-world example of a python script that uses hyperstacks, see the Correct_3D_drift.py script (available as the command "Plugins - Registration - Correct 3D drift"). |

from ij import IJ, ImagePlus, ImageStack, CompositeImage

# Load a stack of images: a fly brain, in RGB

imp = IJ.openImage("https://imagej.nih.gov/ij/images/flybrain.zip")

stack = imp.getImageStack()

# A new stack to hold the data of the hyperstack

stack2 = ImageStack(imp.width, imp.height)

# Convert each color slice in the stack

# to two 32-bit FloatProcessor slices

for i in xrange(1, imp.getNSlices()+1):

# Get the ColorProcessor slice at index i

cp = stack.getProcessor(i)

# Extract the red and green channels as FloatProcessor

red = cp.toFloat(0, None)

green = cp.toFloat(1, None)

# Add both to the new stack

stack2.addSlice(None, red)

stack2.addSlice(None, green)

# Create a new ImagePlus with the new stack

imp2 = ImagePlus("32-bit 2-channel composite", stack2)

imp2.setCalibration(imp.getCalibration().copy())

# Tell the ImagePlus to represent the slices in its stack

# in hyperstack form, and open it as a CompositeImage:

nChannels = 2 # two color channels

nSlices = stack.getSize() # the number of slices of the original stack

nFrames = 1 # only one time point

imp2.setDimensions(nChannels, nSlices, nFrames)

comp = CompositeImage(imp2, CompositeImage.COLOR)

comp.show()

|

||||||

7. Interacting with humans: file and option dialogs, messages, progress bars.Ask the user for a directory See DirectoryChooser. |

from ij.io import DirectoryChooser

dc = DirectoryChooser("Choose a folder")

folder = dc.getDirectory()

if folder is None:

print "User canceled the dialog!"

else:

print "Selected folder:", folder

|

||||||

|

Ask the user for a file See OpenDialog and SaveDialog. |

from ij.io import OpenDialog

od = OpenDialog("Choose a file", None)

filename = od.getFileName()

if filename is None:

print "User canceled the dialog!"

else:

directory = od.getDirectory()

filepath = directory + filename

print "Selected file path:", filepath

|

||||||

|

Show a progress bar Will show a progress bar in the Fiji window. |

from ij import IJ

imp = IJ.getImage()

stack = imp.getImageStack()

for i in xrange(1, stack.getSize()+1):

# Report progress

IJ.showProgress(i, stack.getSize()+1)

# Do some processing

ip = stack.getProcessor(i)

# ...

# Signal completion

IJ.showProgress(1)

|

||||||

|

Ask the user to enter a few parameters in a dialog There are more possibilities, but these are the basics. See GenericDialog. All plugins that use a GenericDialog are automatable. Remember how above we run a command on an image? When the names in the dialog fields match the names in the macro string, the dialog is fed in the values automatically. If a dialog field doesn't have a match, it takes the default value as defined in the dialog declaration. If a plugin was using a dialog like the one we built here, we would run it automatically like this:

args = "name='first' alpha=0.5 output='32-bit' scale=80"

IJ.run(imp, "Some PlugIn", args)

Above, leaving out the word 'optimize' means that it will use the default value (True) for it. |

from ij.gui import GenericDialog

def getOptions():

gd = GenericDialog("Options")

gd.addStringField("name", "Untitled")

gd.addNumericField("alpha", 0.25, 2) # show 2 decimals

gd.addCheckbox("optimize", True)

types = ["8-bit", "16-bit", "32-bit"]

gd.addChoice("output as", types, types[2])

gd.addSlider("scale", 1, 100, 100)

gd.showDialog()

#

if gd.wasCanceled():

print "User canceled dialog!"

return

# Read out the options

name = gd.getNextString()

alpha = gd.getNextNumber()

optimize = gd.getNextBoolean()

output = gd.getNextChoice()

scale = gd.getNextNumber()

return name, alpha, optimize, output, scale # a tuple with the parameters

options = getOptions()

if options is not None:

name, alpha, optimize, output, scale = options # unpack each parameter

print name, alpha, optimize, output, scale

|

||||||

|

A reactive dialog: using a preview checkbox Now that you know how to use ImageJ's GenericDialog, here we show how to implement the functionality of a preview checkbox. A preview checkbox is present in many plugins that use a GenericDialog for its options. Instead of waiting for the user to push the "OK" button, the image is updated dynamically, in response to user input--that is, to the user entering a numeric value into a text field, or, in this example, moving a Scrollbar. The key concept is that of a listener. All user interface (UI) elements allow us to register our own functions so that, when there is an update (e.g. the user pushes a keystroke while the UI element is activated, or the mouse is moved or clicked), our function is executed, giving us the opportunity to react to user input. Each type of UI element has its own type of listener. The Scrollbar used here allows us to register an AdjustmentListener. We implement this interface with our class ScalingPreviewer and its method adjustmentValueChanged. Given that the scrollbar can generate many events as the user drags its handle with the mouse, we are only interested in the last event, and therefore we check for event.getValueIsAdjusting() which tells us, when returning True, that by the time we are processing this event there are already more events queued, and then we terminate execution of our response with return. The next event in the queue will ask this question again, and only the last event will proceed to execute self.scale(). When the user again clicks to drag the scroll bar handle, all of this starts again. If the preview checkbox is not ticked, our listener doesn't do anything. If the preview box was not ticked, and then it is, our ScalingPreviewer, which also implements ItemListener (with its only method itemStateChanged) is also registered as a listener for the checkbox and responds, when the checkbox is selected, by calling self.scale(). When the user pushes "OK", the scaling is applied (even if it was already before; more logic would be needed to avoid this duplication when the preview checkbox is ticked). When cancelled, the original image is restored. |

# A reactive generic dialog

from ij.gui import GenericDialog

from ij import WindowManager as WM

from java.awt.event import AdjustmentListener, ItemListener

class ScalingPreviewer(AdjustmentListener, ItemListener):

def __init__(self, imp, slider, preview_checkbox):

"""

imp: an ImagePlus

slider: a java.awt.Scrollbar UI element

preview_checkbox: a java.awt.Checkbox controlling whether to

dynamically update the ImagePlus as the

scrollbar is updated, or not.

"""

self.imp = imp

self.original_ip = imp.getProcessor().duplicate() # store a copy

self.slider = slider

self.preview_checkbox = preview_checkbox

def adjustmentValueChanged(self, event):

""" event: an AdjustmentEvent with data on the state of the scroll bar. """

preview = self.preview_checkbox.getState()

if preview:

if event.getValueIsAdjusting():

return # there are more scrollbar adjustment events queued already

print "Scaling to", event.getValue() / 100.0

self.scale()

def itemStateChanged(self, event):

""" event: an ItemEvent with data on what happened to the checkbox. """

if event.getStateChange() == event.SELECTED:

self.scale()

def reset(self):

""" Restore the original ImageProcessor """

self.imp.setProcessor(self.original_ip)

def scale(self):

""" Execute the in-place scaling of the ImagePlus. """

scale = self.slider.getValue() / 100.0

new_width = int(self.original_ip.getWidth() * scale)

new_ip = self.original_ip.resize(new_width)

self.imp.setProcessor(new_ip)

def scaleImageUI():

gd = GenericDialog("Scale")

gd.addSlider("Scale", 1, 200, 100)

gd.addCheckbox("Preview", True)

# The UI elements for the above two inputs

slider = gd.getSliders().get(0) # the only one

checkbox = gd.getCheckboxes().get(0) # the only one

imp = WM.getCurrentImage()

if not imp:

print "Open an image first!"

return

previewer = ScalingPreviewer(imp, slider, checkbox)

slider.addAdjustmentListener(previewer)

checkbox.addItemListener(previewer)

gd.showDialog()

if gd.wasCanceled():

previewer.reset()

print "User canceled dialog!"

else:

previewer.scale()

scaleImageUI()

|

||||||

|

Managing UI-launched background tasks In the example above, the task to scale an image is run just like that: as soon as the UI element (the Scrollbar) notifies us--via our registered listener--, our listener function adjustmentValueChanged executes the task there and then. By doing so, the task is executed by the event dispatch thread: the same execution thread that updates the rendering of UI elements, and that processes any and all events emited from any UI element. In the case of the scaling task, it executes within milliseconds and we don't notice a thing. A task that would consume several seconds would make us realize that the UI has become unresponsive: clicking anywhere, or typing keys, would not result in any response--until our long-running task completes and then all queued events are executed, one at a time. Execution within the event dispatch thread is undesirable. Therefore, we must execute our task in a different thread. One option could be to submit our task to a thread pool that executes them as they come--but, for our example above, this would be undesirable: once we move the scrollbar, we don't care about prior positions (prior scaling values) the scrollbar held so far, and those tasks, if not yet completed, should be interrupted or not even started. A good solution is to delegate the execution to a ScheduledExecutorService, conveniently launched from Executors.newSingleThreadScheduledExecutor. This function creates a thread that periodically wakes up at fixed time intervals and then executes the function that we gave it--in this case, the ScalingPreviewer itself now implements the Runnable interface, and its only specified method, run, does the work: checks if there's anything to do relative to the last time it run, and if there is, executes it (so we pass self to scheduleWithFixedDelay, plus the timing arguments). This setup doesn't interrupt an update already in progress, but guarantees that we are always only executing one update at a time, and the latest update gets done the last--which is what matters. With this setup, the role of the adjustmentValueChanged is merely to change the state, that is, to update the state of requested_scaling_factor, which takes a negligible amount of time, therefore not interfering with the event dispatch thread's other tasks--and with the whole Fiji UI remaining responsive. Updating the state (the variables stored in the self.state dictionary) is done under a synchronization block: when a thread is accessing either getState or putState, no other thread can access either method, thanks to the function decorator make_synchronized (see below in the tutorial) that guarantees so (it's like java's synchronized reserved word). In principle we could have the self.state keys as member fields of the ScalingPreviewer class (i.e. self.requested_scaling_factor instead of the latter being a key of self.state), because updating a variable to a native value (a number, or a boolean) is an atomic operation. In Jython, though, it may not be--everything is an object, even numbers--and therefore it is best to access the state variables via synchronized methods, so that only one of the two threads does so at a time. It's also a good practice to access shared state under synchronization blocks, and this is a simple example illustrating how to do so. Notice near the end of the run method: the setProcessor is invoked via SwingUtilities.invokeAndWait method, using a lambda function (an anonymous function, which works here because all Jython functions implement the Runnable interface, required by invokeAndWait). This indirection is necessary because setProcessor will resize the window showing the image, and any operation that updates the UI must be run within the event dispatch thread to avoid problems. So yes: different methods of the ScalingPreviewer class are executed by different threads, and that's exactly the desirable setup. Once the user pushes the "OK" or "Cancel" buttons, we must invoke destroy(). Otherwise, the scheduled executor would keep running until quitting Fiji. The destroy() method requests its shutdown(), which will happen when the scheduled executor runs one more, and last, time.

|

# A reactive generic dialog that updates previews in the background

from ij.gui import GenericDialog

from ij import WindowManager as WM

from java.awt.event import AdjustmentListener, ItemListener

from java.util.concurrent import Executors, TimeUnit

from java.lang import Runnable

from javax.swing import SwingUtilities

from synchronize import make_synchronized

class ScalingPreviewer(AdjustmentListener, ItemListener, Runnable):

def __init__(self, imp, slider, preview_checkbox):

"""

imp: an ImagePlus

slider: a java.awt.Scrollbar UI element

preview_checkbox: a java.awt.Checkbox controlling whether to

dynamically update the ImagePlus as the

scrollbar is updated, or not.

"""

self.imp = imp

self.original_ip = imp.getProcessor().duplicate() # store a copy

self.slider = slider

self.preview_checkbox = preview_checkbox

# Scheduled preview update

self.scheduled_executor = Executors.newSingleThreadScheduledExecutor()

# Stored state

self.state = {

"restore": False, # whether to reset to the original

"requested_scaling_factor": 1.0, # last submitted request

"last_scaling_factor": 1.0, # last executed request

"shutdown": False, # to request terminating the scheduled execution

}

# Update, if necessary, every 300 milliseconds

time_offset_to_start = 1000 # one second

time_between_runs = 300

self.scheduled_executor.scheduleWithFixedDelay(self,

time_offset_to_start, time_between_runs, TimeUnit.MILLISECONDS)

@make_synchronized

def getState(self, *keys):

""" Synchronized access to one or more keys.

Returns a single value when given a single key,

or a tuple of values when given multiple keys. """

if 1 == len(keys):

return self.state[keys[0]]

return tuple(self.state[key] for key in keys)

@make_synchronized

def putState(self, key, value):

self.state[key] = value

def adjustmentValueChanged(self, event):

""" event: an AdjustmentEvent with data on the state of the scroll bar. """

preview = self.preview_checkbox.getState()

if preview:

if event.getValueIsAdjusting():

return # there are more scrollbar adjustment events queued already

self.scale()

def itemStateChanged(self, event):

""" event: an ItemEvent with data on what happened to the checkbox. """

if event.getStateChange() == event.SELECTED:

self.scale()

def reset(self):

""" Restore the original ImageProcessor """

self.putState("restore", True)

def scale(self):

self.putState("requested_scaling_factor", self.slider.getValue() / 100.0)

def run(self):

""" Execute the in-place scaling of the ImagePlus,

here playing the role of a costly operation. """

if self.getState("restore"):

print "Restoring original"

ip = self.original_ip

self.putState("restore", False)

else:

requested, last = self.getState("requested_scaling_factor", "last_scaling_factor")

if requested == last:

return # nothing to do

print "Scaling to", requested

new_width = int(self.original_ip.getWidth() * requested)

ip = self.original_ip.resize(new_width)

self.putState("last_scaling_factor", requested)

# Request updating the ImageProcessor in the event dispatch thread,

# given that the "setProcessor" method call will trigger

# a change in the dimensions of the image window

SwingUtilities.invokeAndWait(lambda: self.imp.setProcessor(ip))

# Terminate recurrent execution if so requested

if self.getState("shutdown"):

self.scheduled_executor.shutdown()

def destroy(self):

self.putState("shutdown", True)

def scaleImageUI():

gd = GenericDialog("Scale")

gd.addSlider("Scale", 1, 200, 100)

gd.addCheckbox("Preview", True)

# The UI elements for the above two inputs

slider = gd.getSliders().get(0) # the only one

checkbox = gd.getCheckboxes().get(0) # the only one

imp = WM.getCurrentImage()

if not imp:

print "Open an image first!"

return

previewer = ScalingPreviewer(imp, slider, checkbox)

slider.addAdjustmentListener(previewer)

checkbox.addItemListener(previewer)

gd.showDialog()

if gd.wasCanceled():

previewer.reset()

print "User canceled dialog!"

else:

previewer.scale()

previewer.destroy()

scaleImageUI()

|

||||||

|

Building user interfaces (UI): basic concepts In addition to using ImageJ/Fiji's built-in methods for creating user interfaces, java offers a large and rich library to make your own custom user interfaces. Here, I explain how to create a new window and populate it with buttons, text fields and text labels, and how to use them. A basic example: create a window with a single button in it, that says "Measure", and which runs the "Analyze - Measure" command, first checking whether any image is open. First we declare the measure function, which checks whether an image is open, and if so, runs the measuring command like you learned above. Then we create a JFrame, which is the window itself with the typical buttons for closing or minimizing it. Note we give the constructor the keyword argument "visible": this is jython's way of short-circuiting a lot of boiler plate code that would be required in java. When invoking a constructor, in jython, you can take any public method whose name starts with "set", such as "setVisible" in JFrame, and instead invoke it by using "visible" (with lowercase first letter) as a keyword argument in the constructor. This is the so-called bean property architecture of get and set methods, e.g. if a class has a "setValue" (with one argument) and "getValue" (without arguments, returning the value) methods, use "value" directly as a keyword argument in the constructor, or as a public field like frame.visible = True, which is the exact same as frame.setVisible(True). When the button is instantiated, we also pass a method to its constructor as a keyword argument: actionPerformed=measure. While the mechanism is similar to the bean property system above, it is not the same: jython searches the listener interfaces that the class methods can accept for registration. In this case, the JButton class provides a method (addActionListener) that accepts as argument an object implementing the ActionListener interface, which specifies a single method, actionPerformed, whose name matches the given keyword argument. Jython then automatically generates all the code necessary to make any calls to actionPerformed be redirected to our function measure. Notice that measure takes one argument: the event, in this case an ActionEvent whose properties (not explored here) define how and where and when was the button pushed (i.e. left mouse click, right mouse click, etc.). |

from javax.swing import JFrame, JButton, JOptionPane

from ij import IJ, WindowManager as WM

def measure(event):

""" event: the ActionEvent that tells us about the button having been clicked. """

imp = WM.getCurrentImage()

print imp

if imp:

IJ.run(imp, "Measure", "")

else:

print "Open an image first."

frame = JFrame("Measure", visible=True)

button = JButton("Area", actionPerformed=measure)

frame.getContentPane().add(button)

frame.pack()

|

||||||

|

Here is the same code, but explicitly creating a class that implements ActionListener, and then we add the listener to the button by invoking addActionListener. Jython frees us from having to explicitly write this boilerplate code, but we can, if we need to, for more complex situations. (This Measure class is simple, lacking even an explicit constructor--would be a method named __init__, by convention in python. So the default constructor Measure() is invoked without arguments.) |

from javax.swing import JFrame, JButton, JOptionPane

from ij import IJ, WindowManager as WM

from java.awt.event import ActionListener

class Measure(ActionListener):

def actionPerformed(self, event):

""" event: the ActionEvent that tells us about the button having been clicked. """

imp = WM.getCurrentImage()

print imp

if imp:

IJ.run(imp, "Measure", "")

else:

print "Open an image first."

frame = JFrame("Measure", visible=True)

button = JButton("Area")

button.addActionListener(Measure())

frame.getContentPane().add(button)

frame.pack()

|

||||||

|

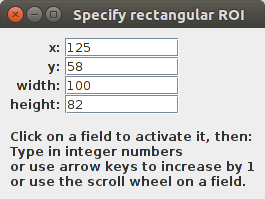

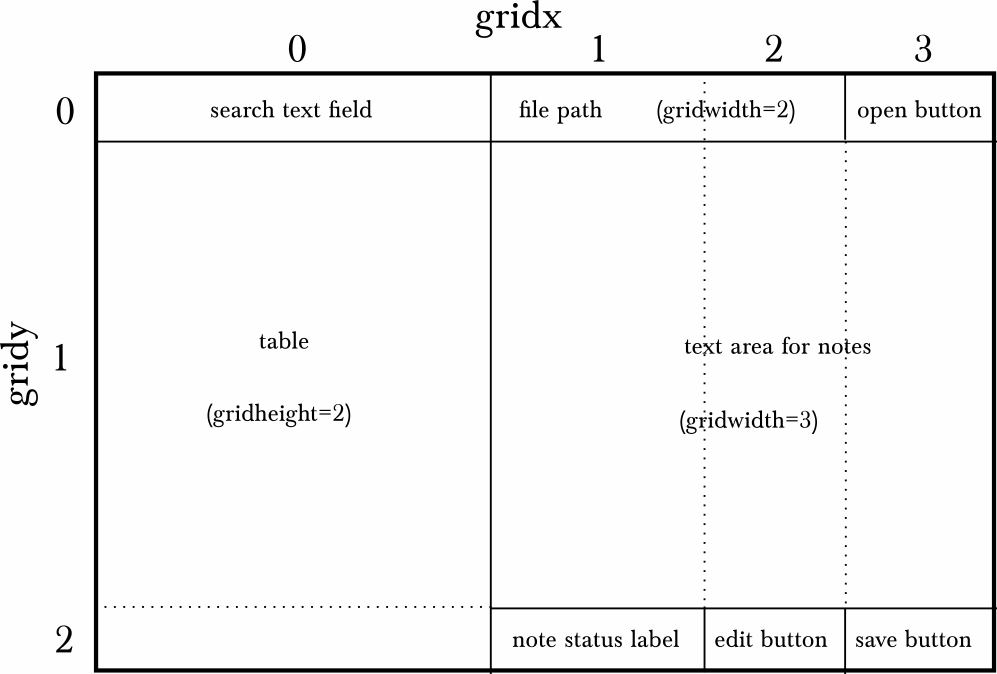

Create a UI: open a window to edit the position and dimensions of an ROI Here I illustrate how to create a user interface to accomplish a task that requires user input. Namely, how to edit the position (x, y) and dimensions (width, height) of an ImageJ rectangular ROI by typing in the precise integer values, or using arrows to increase/decrease the existing values, or using the scroll wheel to do the same. First, we define a RoiMaker class that extends KeyAdapter and implements the MouseWheelListener interface. The KeyAdapter class is a convenience class that implements all methods of the KeyListener interface, but where none of the methods do anything, sparing us from having to implement methods without a body. In RoiMaker, the constructor takes the list of all text fields to listen to (textfields: a total of four, for x, y, width, height of the ROI), and an index (ranging from 0 to 3) over that list, to determine which field to listen to. The parse method gets the text in the field and attempts to parse it as an integer. When it fails, the ROI is not updated, and the field is left painted with a red background. The Color returns back upon editing the value to a valid integer (actually, at every push of a key it will get set to white, and it is not set to red when parsing to an integer is successful). The update method attempts to parse the value in the text field, and can increment it (by 1 or -1, from the arrow keys), and if successful, updates the ROI on the active image. The keyReleased method overrides the homonimous method form the KeyAdapter, and implements the logic: if an arrow key is typed, increase or decrease by 1, accordingly; if any key is typed, just call update to parse the value and modify the ROI on the active image. Finally, the mouseWheelMoved method is from the MouseWheelListener interface, and is used to respond to events from the mouse wheel that happen over the text field. The wheel rotation is a positive or negative integer value, depending on the direction of rotation. Then we create the TextFieldUpdater class, which implements the RoiListener interface. Its only method roiModified provides an ImagePlus instance (imp) as argument, that we could use (but here we don't) to check whether a response is needed. Here, we ignore imp and respond no matter which value of imp is provided. We get the bounds from the ROI and update the content of the text fields. In this way, if the user manually drags the rectangular ROI around, or pulls its handles to change its dimensions or location, the textfields' values are updated. The CloseControl class extends WindowAdapter (similarly to how KeyAdapter implements empty methods for all methods of the KeyListener interface), and we implement only windowClosing: a method invoked when a window is closing, and which enables us to prevent its closing (by consuming the event), or to make it happen (by calling dispose() on the source of the event, which is the JFrame object (see below) that represents the whole window. Notice we ask the user, using a JOptionPane, to confirm whether the window should be closed--it is all too common to close a window by accidentally clicking the wrong button. With these classes in place, we declare the function specifyRoiUI which creates the window with text fields for manipulating the ROI position and dimensions, with all the event listeners that we declared above. The major abstraction here is that of a panel, with the class JPanel. A panel, like the panel in a figure of a scientific paper, is merely a bit of space: an area on the screen over which we define some behavior. How the content of the panel is laid out is managed here by a GridBagLayout: the most powerful--and to me, most intuitive and predictable--layout manager available in the java libraries. The GridBagLayout works as follows: for every UI element (a piece of text, a text field for entering text, a button, a panel (i.e. nested, a panel occupying a cell of another panel), etc.) to add to the panel, a set of constraints govern how the element is rendered (where, and in which dimensions). As its name indicates, the GridBagLayout is a grid, with each cell of the grid taking potentially different dimensions--but with all cells of the saw row having the same height, and all cells of the same column having the same width. The GridBagConstraints (here imported as GBC for brevity, with the variable gc pointing to an instance) contains a bunch of public, editable fields; when setting the constraints for an UI element, these are copied, so the gc object can continue to be edited, which facilitates e.g. sharing all constraints with the UI element next to the last added, except for e.g. its position in the X axis (i.e. by increasing the value of gc.gridx). In the for loop over the ["x", "y", "width", "height"] properties of a ROI's bounds, first a new JLabel is created, and then the value (read with the built-in function getattr to read an object's field by name) is used to create a new JTextField that will be used to edit it. Notice how the gc layout constraints are edited for each element (the label and the textfield) prior to adding them to the panel. The sequence is always the same: (1) create the UI element (e.g. a JTextField); (2) having adjusted the constraints (gc), call gb.setConstraints; and (3) add the UI element to the panel. Finally, a RoiMaker listener is created for each textfield, in order to respond to user input and update the ROI accordingly on the active image. Whenever a keyboard event or a mouse event occurs--actions initiated by the user--, the UI element will invoke the appropriate method of our listener, giving us the opportunity to react to the user's input. At the bottom, we add a doc to instruct the user on how to control the ROI position and dimensions using the keyboard and the mouse scroll wheel. Notice how we use gc.gridwidth = 2, to make the text span two horizontally adjacent cells instead of just one, and also we set gc.gridx = 0 to starting counting 2 cells from the first one, at index 0. Then, we instantiate a TextFieldUpdater (which implements RoiListener) and register it via Roi.addRoiListener, so that ImageJ will notify our class of any changes to an image's ROI, with our class then updating the text fields with the new values. E.g. if you were to drag the ROI, the first two fields, for the x, y position, would be updated. All that is left now is to define a JFrame that will contain our panel and offer buttons to e.g. close it or minimize it. The CloseControl class (see above) will manage whether the frame is closed (or not) upon clicking on its close button, and for that to work, we disable automatic closing first. Finally, we invoke the specifyRoiUI function to set it all up and show us our window. As final remarks, notice you could merely use Fiji's "Edit - Selection - Specify..." menu, which provides this functionality although not as interactive (it's a modal dialog, meaning, once open, no other window will respond to any input, and the dialog will remain on top always until closed). And notice as well that the ROI will be set on the active image: if the latter changes, the ROI--if any--of the active image will be replaced by the ROI specified by the position and dimension fields of this UI.

|

# Albert Cardona 2019-06-20

# Based on code written previously for lib/isoview_ui.py

from ij import IJ, ImagePlus, ImageListener

from ij.gui import RoiListener, Roi

from java.awt.event import KeyEvent, KeyAdapter, MouseWheelListener, WindowAdapter

from java.awt import Color, Rectangle, GridBagLayout, GridBagConstraints as GBC

from javax.swing import JFrame, JPanel, JLabel, JTextField, BorderFactory, JOptionPane

class RoiMaker(KeyAdapter, MouseWheelListener):

def __init__(self, textfields, index):

""" textfields: the list of 4 textfields for x,y,width and height.

index: of the textfields list, to chose the specific textfield to listen to. """

self.textfields = textfields

self.index = index

def parse(self):

""" Read the text in textfields[index] and parse it as a number.

When not a number, fail gracefully, print the error and paint the field red. """

try:

return int(self.textfields[self.index].getText())

except:

print "Can't parse integer from text: '%s'" % self.textfields[self.index].getText()

self.textfields[self.index].setBackground(Color.red)

def update(self, inc):

""" Set the rectangular ROI defined by the textfields values onto the active image. """

value = self.parse()

if value:

self.textfields[self.index].setText(str(value + inc))

imp = IJ.getImage()

if imp:

imp.setRoi(Roi(*[int(tf.getText()) for tf in self.textfields]))

def keyReleased(self, event):

""" If an arrow key is pressed, increase/decrese by 1.

If text is entered, parse it as a number or fail gracefully. """

self.textfields[self.index].setBackground(Color.white)

code = event.getKeyCode()

if KeyEvent.VK_UP == code or KeyEvent.VK_RIGHT == code:

self.update(1)

elif KeyEvent.VK_DOWN == code or KeyEvent.VK_LEFT == code:

self.update(-1)

else:

self.update(0)

def mouseWheelMoved(self, event):

""" Increase/decrese value by 1 according to the direction

of the mouse wheel rotation. """

self.update(- event.getWheelRotation())

class TextFieldUpdater(RoiListener):

def __init__(self, textfields):

self.textfields = textfields

def roiModified(self, imp, ID):

""" When the ROI of the active image changes, update the textfield values. """

if imp != IJ.getImage():

return # ignore if it's not the active image

roi = imp.getRoi()

if not roi or Roi.RECTANGLE != roi.getType():

return # none, or not a rectangle ROI

bounds = roi.getBounds()

if 0 == roi.getBounds().width + roi.getBounds().height:

bounds = Rectangle(0, 0, imp.getWidth(), imp.getHeight())

self.textfields[0].setText(str(bounds.x))

self.textfields[1].setText(str(bounds.y))

self.textfields[2].setText(str(bounds.width))

self.textfields[3].setText(str(bounds.height))

class CloseControl(WindowAdapter):

def __init__(self, roilistener):

self.roilistener = roilistener

def windowClosing(self, event):

answer = JOptionPane.showConfirmDialog(event.getSource(),

"Are you sure you want to close?",

"Confirm closing",

JOptionPane.YES_NO_OPTION)

if JOptionPane.NO_OPTION == answer:

event.consume() # Prevent closing

else:

Roi.removeRoiListener(self.roilistener)

event.getSource().dispose() # close the JFrame

def specifyRoiUI(roi=Roi(0, 0, 0, 0)):

# A panel in which to place UI elements

panel = JPanel()

panel.setBorder(BorderFactory.createEmptyBorder(10,10,10,10))

gb = GridBagLayout()

panel.setLayout(gb)

gc = GBC()

bounds = roi.getBounds() if roi else Rectangle()

textfields = []

roimakers = []

# Basic properties of most UI elements, will edit when needed

gc.gridx = 0 # can be any natural number

gc.gridy = 0 # idem.

gc.gridwidth = 1 # when e.g. 2, the UI element will occupy

# two horizontally adjacent grid cells

gc.gridheight = 1 # same but vertically

gc.fill = GBC.NONE # can also be BOTH, VERTICAL and HORIZONTAL

for title in ["x", "y", "width", "height"]:

# label

gc.gridx = 0

gc.anchor = GBC.EAST

label = JLabel(title + ": ")

gb.setConstraints(label, gc) # copies the given constraints 'gc',

# so we can modify and reuse gc later.

panel.add(label)

# text field, below the title

gc.gridx = 1

gc.anchor = GBC.WEST

text = str(getattr(bounds, title)) # same as e.g. bounds.x, bounds.width, ...

textfield = JTextField(text, 10) # 10 is the size of the field, in digits

gb.setConstraints(textfield, gc)

panel.add(textfield)

textfields.append(textfield) # collect all 4 created textfields for the listeners

# setup ROI and text field listeners

# (second argument is the index of textfield in the list of textfields)

listener = RoiMaker(textfields, len(textfields) -1)

roimakers.append(listener)

textfield.addKeyListener(listener)

textfield.addMouseWheelListener(listener)

# Position next ROI property in a new row

# by increasing the Y coordinate of the layout grid

gc.gridy += 1

# User documentation (uses HTML to define line breaks)

doc = JLabel("

|

||||||

|

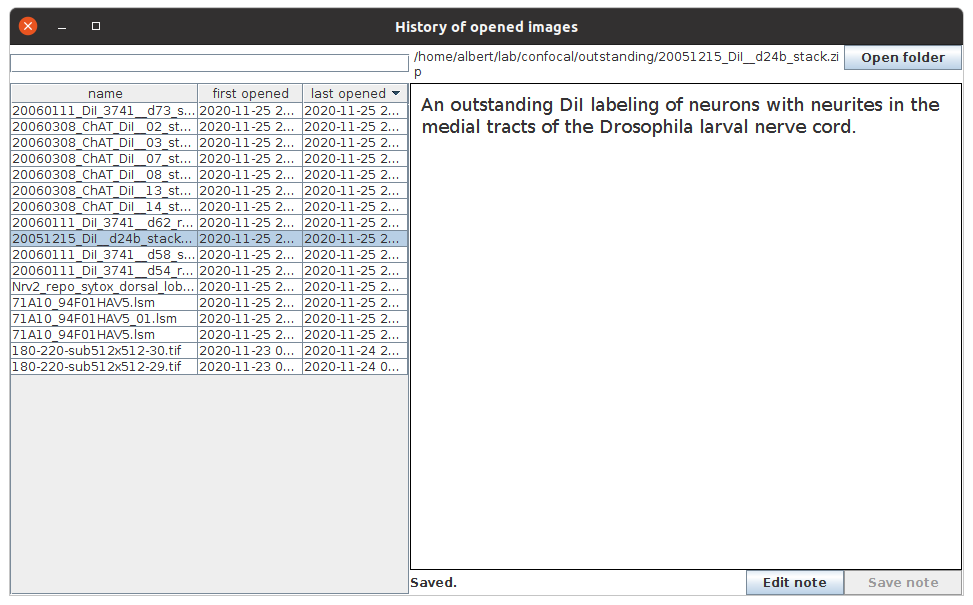

Create a UI: searchable, tabulated view of file paths

In a research project running for some years the number of image files can grow to large numbers, often hundreds of thousands or milions. Because of the sheer number, these data sets are often stored in fileshares, mounted locally in your workstation via SMB or NFS. Often, searching for specific files in these data sets is slow, because the file system is slow or some other reason. To overcome this limitation, here we first run a shell one-liner script that writes all file paths into a text file, at one file path per line. Whenever the data set grows or changes, you'll have to re-run it:

$ find . -type f -printf "%p\n" > ~/Desktop/list.txt

To run this python script, either just run it, and an OpenDialog will ask you for the text file with the file path list, or, update the txt_file variable to point to the file with the file paths. Once open, there's a search field at the top, and a table showing all file paths below. If the paths are relative (i.e. don't start with a '/' in *nix systems), fill in the text field at the bottom with the base path (the path to the directory that contains all the relative paths). Then, search: type in any text and push return. The listing of file paths will be reduced to those that contain the text. Alternatively, use a leading '/' in your text field to use regular expressions. Once the desired file is listed, double-click it: Fiji will open it, just as if you had drag and dropped it onto the Fiji/ImageJ toolbar, or had opened via "File - Open". The script first defines a TableModel, which is a class that extends AbstractTableModel. A model, in user interface parlance, is actually the data itself: a data structure that organizes the data in a predetermined way, following an interface contract (here, the homonymously named TableModel from the javax.swing package for building user interfaces). If you read the methods of class TableModel you'll notice methods for getting the number of columns and rows, and retrieving the data from a particular table cell, by index. All of these are methods required by the TableModel interface. I've added an additional method, filter, which, when invoked with a string as argument (named regex, but which can be plain text or, when starting with a '/', it's interpreted as a regular expression) will then shorten the list of stored file paths self.paths to merely those that match. The filtering is done in parallel using the Collections.parallelStream method that all java collections, including ArrayList (self.paths is an instance of ArrayList) implement. The class RowClickListener implements a listener for the mouse click on a row, obviously. This listener will later be added to the list of listeners of the table. The class EnterListener does just that for the search field. The class Closing is also a listener, but one that extends the WindowAdapter class to spare us from having to implement methods from the WindowListener interface that we won't use. This is a common pattern in java user interface libraries: a listener interface declares various methods, and then an adapter class that implements that interface exists, with empty implementations for all of the interface's methods, for convenience. The function makeUI binds it all together: creates the table, sets it up in a frame that also contains the regex_field where we'll type the search string, and the base_path_field for the base path string if needed. Then various JPanel organize these UI elements and a simple BoxLayout organizes where they render (see also another version of this script using GridBagLayout). Then instances of the various listeners are added to the table and fields. The launch function is a convenient wrapper for a run function that invokes makeUI with a given table model. Later, this isused to show the user interface from the event dispatch thread via SwingUtilities.invokeLater, as required for all UI-editing operations of the javax.swing library (see the "Swing's Threading Policy" at the bottom of this documentation). Turns out that all jython functions implement Runnable, the type expected by the sole argument of invokeLater, so instead of creating yet another class just to implement it, we use run instead. |

# A graphical user interface to keep track of files opened with Fiji

# and with the possibility of taking notes for each,

# which are persisted in a CSV file.

#

# Select a row to see its file path and note, if any.

# Double-click the file path to open the image corresponding to the selected table row.

# Click the "Open folder" to open the containing folder.

# Click the "Edit note" to start editing it, and "Save note" to sync to a CSV file.

#

# Albert Cardona 2020-11-22

from javax.swing import JPanel, JFrame, JTable, JScrollPane, JButton, JTextField, \

JTextArea, ListSelectionModel, SwingUtilities, JLabel, BorderFactory

from javax.swing.table import AbstractTableModel

from java.awt import GridBagLayout, GridBagConstraints, Dimension, Font, Insets, Color

from java.awt.event import KeyAdapter, MouseAdapter, KeyEvent, ActionListener, WindowAdapter

from javax.swing.event import ListSelectionListener

from java.lang import Thread, Integer, String, System

import os, csv, re

from datetime import datetime

from ij import ImageListener, ImagePlus, IJ, WindowManager

from ij.io import OpenDialog

from java.util.concurrent import Executors, TimeUnit

from java.util.concurrent.atomic import AtomicBoolean

from java.io import File

# EDIT here: where you want the CSV file to live.

# By default, lives in your user home directory as a hidden file.

csv_image_notes = os.path.join(System.getProperty("user.home"),

".fiji-image-notes.csv")

# Generic read and write CSV functions

def openCSV(filepath, header_length=1):

with open(filepath, 'r') as csvfile:

reader = csv.reader(csvfile, delimiter=',', quotechar="\"")

header_rows = [reader.next() for i in xrange(header_length)]

rows = [columns for columns in reader]

return header_rows, rows

def writeCSV(filepath, header, rows):

""" filepath: where to write the CSV file

header: list of header titles

rows: list of lists of column values

Writes first to a temporary file, and upon successfully writing it in full,

then rename it to the target filepath, overwriting it.

"""

with open(filepath + ".tmp", 'wb') as csvfile:

w = csv.writer(csvfile, delimiter=',', quotechar="\"",

quoting=csv.QUOTE_NONNUMERIC)

if header:

w.writerow(header)

for row in rows:

w.writerow(row)

# when written in full, replace the old one if any

os.rename(filepath + ".tmp", filepath)

# Prepare main data structure: a list (rows) of lists (columns)

# Load the CSV file if it exists, otherwise use an empty data structure

if os.path.exists(csv_image_notes):

header_rows, entries = openCSV(csv_image_notes, header_length=1)

header = header_rows[0]

else:

header = ["name", "first opened", "last opened", "filepath", "notes"]

entries = []

# The subset of entries that are shown in the table (or all)

table_entries = entries

# Map of file paths vs. index of entries

image_paths = {row[3]: i for i, row in enumerate(entries)}

# A model (i.e. an interface to access the data) of the JTable listing all opened image files

class TableModel(AbstractTableModel):

def getColumnName(self, col):

return header[col]

def getColumnClass(self, col): # for e.g. proper numerical sorting

return String # all as strings

def getRowCount(self):

return len(table_entries)

def getColumnCount(self):

return len(header) -2 # don't show neither the full filepath nor the notes in the table

def getValueAt(self, row, col):

return table_entries[row][col]

def isCellEditable(self, row, col):

return False # none editable

def setValueAt(self, value, row, col):

pass # none editable

# Create the GUI: a 3-column table and a text area next to it

# to show and write notes for any selected row, plus some buttons and a search field

all = JPanel()

all.setBackground(Color.white)

gb = GridBagLayout()

all.setLayout(gb)

c = GridBagConstraints()

# Top-left element: a text field for filtering rows by regular expression match

c.gridx = 0

c.gridy = 0

c.anchor = GridBagConstraints.CENTER

c.fill = GridBagConstraints.HORIZONTAL

search_field = JTextField("")

gb.setConstraints(search_field, c)

all.add(search_field)

# Bottom left element: the table, wrapped in a scrollable component

table = JTable(TableModel())

table.setSelectionMode(ListSelectionModel.SINGLE_SELECTION)

#table.setCellSelectionEnabled(True)

table.setAutoCreateRowSorter(True) # to sort the view only, not the data in the underlying TableModel

c.gridx = 0

c.gridy = 1

c.anchor = GridBagConstraints.NORTHWEST

c.fill = GridBagConstraints.BOTH # resize with the frame

c.weightx = 1.0

c.gridheight = 2

jsp = JScrollPane(table)

jsp.setMinimumSize(Dimension(400, 500))

gb.setConstraints(jsp, c)

all.add(jsp)

# Top component: a text area showing the full file path to the image in the selected table row

c.gridx = 1

c.gridy = 0

c.gridheight = 1

c.gridwidth = 2

path = JTextArea("")

path.setEditable(False)

path.setMargin(Insets(4, 4, 4, 4))

path.setLineWrap(True)

path.setWrapStyleWord(True)

gb.setConstraints(path, c)

all.add(path)

# Top-right button to open the folder containing the image in the selected table row

c.gridx = 3

c.gridy = 0

c.gridwidth = 1

c.fill = GridBagConstraints.NONE

c.weightx = 0.0 # let the previous ('path') component stretch as much as possible

open_from_folder = JButton("Open folder")

gb.setConstraints(open_from_folder, c)

all.add(open_from_folder)

# Middle-right textarea showing the text of a note associated with the selected table row image

c.gridx = 1

c.gridy = 1

c.weighty = 1.0

c.gridwidth = 3

c.fill = GridBagConstraints.BOTH

textarea = JTextArea()

textarea.setBorder(BorderFactory.createCompoundBorder(